You probably already know that transformers have a maximum sequence length, and that by default anything beyond this limit is ignored. But what happens if you can’t afford to truncate the inputs? The usual answer is a sliding window approach, where you extract subsets of the text and process each in turn.
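To make the sliding window idea concrete, here is a minimal sketch over whitespace-split words. The function name `sliding_windows` and the `window_size` and `stride` parameters are illustrative assumptions, not code from the notebook, and word counts only approximate a model's real token counts.

```python
def sliding_windows(tokens, window_size, stride):
    """Return windows of at most `window_size` tokens, advancing `stride` each step.

    Hypothetical helper for illustration. A stride smaller than the window
    size makes consecutive windows overlap, so text near a boundary is seen
    with context on both sides.
    """
    if window_size <= 0 or stride <= 0:
        raise ValueError("window_size and stride must be positive")
    if stride > window_size:
        raise ValueError("stride larger than window_size would skip tokens")
    if not tokens:
        return []

    windows = []
    start = 0
    while True:
        windows.append(tokens[start:start + window_size])
        # Stop once the current window reaches the end of the input.
        if start + window_size >= len(tokens):
            break
        start += stride
    return windows


words = "a b c d e f g".split()
for window in sliding_windows(words, window_size=4, stride=2):
    print(" ".join(window))
```

Each window can then be processed on its own and the per-window results combined. How to combine them (max, mean, or something else) is a separate design choice.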

Normally I just split on whitespace, but I’ve been interested in trying out NLTK’s sentence tokenizer. Recommending resumes for a free-form text query is as good an excuse as any. 🙂
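One way sentence boundaries could feed into chunking is to pack whole sentences into chunks under a word budget, so no chunk cuts a sentence in half. This is a rough sketch under assumptions: `pack_sentences` and `max_words` are hypothetical names, and the word budget is only a stand-in for the model's real token limit. The sentences here are hard-coded. With NLTK you would get them from `sent_tokenize` in `nltk.tokenize`, which needs the Punkt data downloaded first.

```python
def pack_sentences(sentences, max_words):
    """Greedily group sentences into chunks of at most `max_words` words.

    Hypothetical helper for illustration. A single sentence longer than
    `max_words` still becomes its own chunk, so it may exceed the budget.
    """
    chunks = []
    current = []
    count = 0
    for sentence in sentences:
        n_words = len(sentence.split())
        # Start a new chunk if adding this sentence would exceed the budget.
        if current and count + n_words > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n_words
    if current:
        chunks.append(" ".join(current))
    return chunks


sentences = [
    "I build .NET services.",
    "I live in Atlanta.",
    "I like C#.",
]
for chunk in pack_sentences(sentences, max_words=8):
    print(chunk)
```

Compared with splitting on whitespace alone, this keeps each chunk readable as complete sentences, at the cost of chunks that vary in length.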

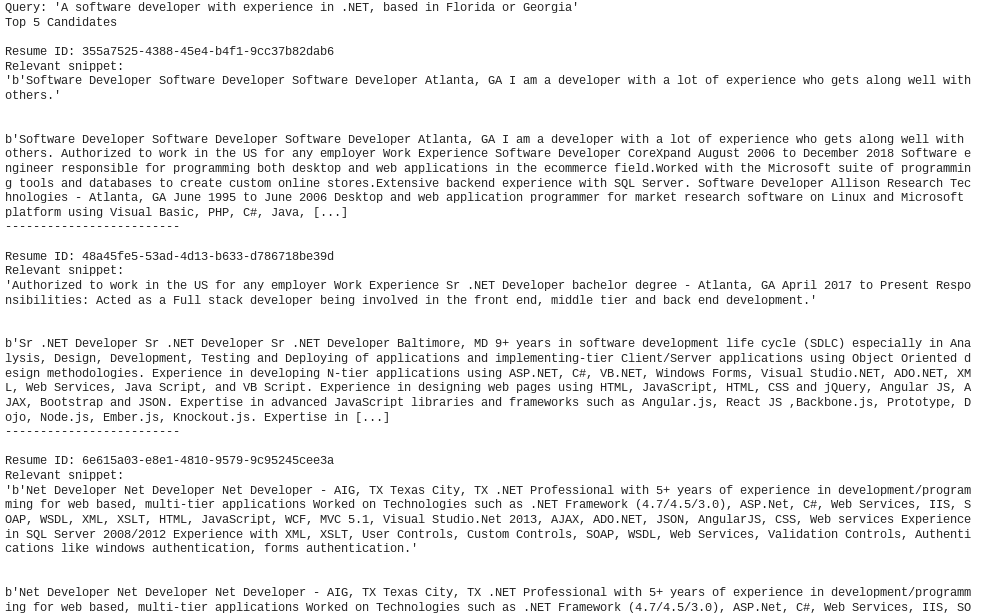

The final approach lets you use natural language queries and tries to return the most relevant results. Here’s a sample, where I asked for .NET developers in Florida or Georgia:

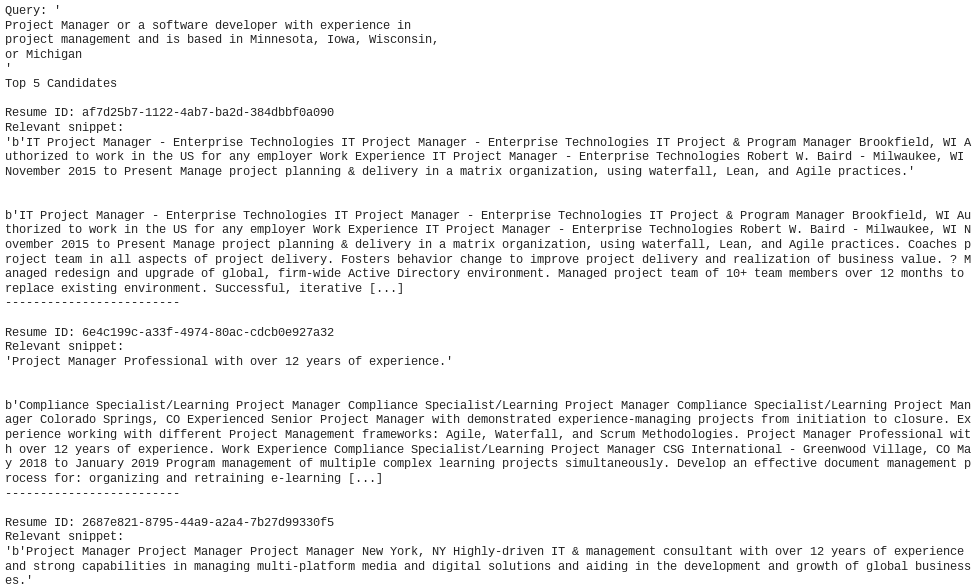

Notice how the two most relevant results are .NET developers in Atlanta? I think the recommender ran out of GA .NET devs after that and started returning .NET developers in general. A similar thing happens when I ask it for project managers in MN or WI.

It is encouraging, though, that the approach tries to match both location and job description, and then falls back to just the job description when it runs out of local candidates. Have a look at the notebook for the full output!