Today I’m open sourcing a proof of concept for applying LLMs to SEO.

My main interest here was to try out a couple of theories I had about LLMs. I won’t pretend to know much about SEO, but in a nutshell here’s how everything works:

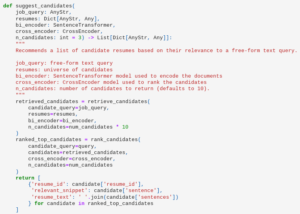

- Conduct a web search and fetch the contents of the top results (e.g. the top 10).

- Clean the contents and cluster the text.

- Find the largest clusters and their centroids. The theory is that the largest clusters represent the words or phrases used most often in the top results, and that each centroid is a good representation of its cluster. You can think of the centroids as common themes shared by the top search results, so picking up on these themes should help improve our site’s rank for that particular search.

- Present the centroids to the LLM along with the original search, and have the LLM make recommendations based on the search and the words that seem to show up the most often in the top results.
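
The clustering steps above can be sketched in a few lines of plain Python. This is a minimal illustration and not the code from the project: it assumes bag-of-words vectors, cosine similarity, and a tiny k-means seeded with the first `k` chunks. The function names (`vectorize`, `kmeans`, `top_themes`) are hypothetical. A real pipeline would more likely use embeddings and a proper clustering library.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Turn a text chunk into a bag-of-words count vector (a Counter)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b.get(word, 0) for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def mean_vector(vectors):
    """Centroid of a cluster: the element-wise mean of its vectors."""
    total = Counter()
    for vec in vectors:
        total.update(vec)
    return {word: count / len(vectors) for word, count in total.items()}


def kmeans(vectors, k, iterations=10):
    """Tiny k-means using cosine similarity; seeds are the first k vectors."""
    centroids = [dict(v) for v in vectors[:k]]
    assignments = [0] * len(vectors)
    for _ in range(iterations):
        # Assign every vector to its most similar centroid.
        assignments = [
            max(range(len(centroids)), key=lambda c: cosine(vec, centroids[c]))
            for vec in vectors
        ]
        # Recompute each centroid from its current members.
        for c in range(len(centroids)):
            members = [v for v, a in zip(vectors, assignments) if a == c]
            if members:  # keep the old centroid if a cluster went empty
                centroids[c] = mean_vector(members)
    return assignments, centroids


def top_themes(chunks, k=2, top_n=2):
    """Return (cluster size, representative chunk) for the largest clusters."""
    vectors = [vectorize(chunk) for chunk in chunks]
    assignments, centroids = kmeans(vectors, k)
    themes = []
    for cluster_id, size in Counter(assignments).most_common(top_n):
        members = [i for i, a in enumerate(assignments) if a == cluster_id]
        # The chunk closest to the centroid stands in for the whole cluster.
        best = max(members, key=lambda i: cosine(vectors[i], centroids[cluster_id]))
        themes.append((size, chunks[best]))
    return themes
```

Calling `top_themes(chunks)` on a list of cleaned text chunks returns the biggest clusters first, each paired with the chunk nearest its centroid. Those representative chunks are what would be handed to the LLM as "themes".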

From a coding perspective, I wanted to test a theory: now that chat-style APIs have become the de facto standard for LLMs, it should be fairly straightforward to stick to simple REST requests and still support multiple LLMs. I’ve kept the modules for Mistral and OpenAI support separate for now in case the prompts need to differ, but so far I haven’t seen them diverge.
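
To make the "simple REST requests" idea concrete, here is a rough sketch using only the standard library. It is not the project's actual module layout. It assumes both providers accept the same chat-completions request shape (a `model` plus a list of `messages`, with a bearer token) and return the reply under `choices[0].message.content`. The prompt wording, the function names, and whatever model name you pass in are all placeholders.

```python
import json
import urllib.request

# Both providers expose a chat-completions endpoint with the same basic shape.
PROVIDERS = {
    "openai": "https://api.openai.com/v1/chat/completions",
    "mistral": "https://api.mistral.ai/v1/chat/completions",
}


def build_request(provider, api_key, model, search, themes):
    """Build (but do not send) a chat request asking for SEO advice."""
    prompt = (
        f"Search query: {search}\n"
        "Themes found in the top results:\n"
        + "\n".join(f"- {theme}" for theme in themes)
        + "\nSuggest SEO improvements for a page targeting this query."
    )
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        PROVIDERS[provider],
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_json):
    """Pull the assistant text out of a chat-completions style response."""
    return response_json["choices"][0]["message"]["content"]


def ask(provider, api_key, model, search, themes):
    """Send the request; needs network access and a valid API key."""
    request = build_request(provider, api_key, model, search, themes)
    with urllib.request.urlopen(request, timeout=60) as response:
        return extract_reply(json.load(response))
```

Switching providers is then just a matter of changing the `provider` and `model` arguments to `ask`. If the prompts ever do need to diverge, `build_request` is the one place that would have to split.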

From the NLP perspective, my main interest was combining conventional NLP techniques with LLMs, in this case trying to answer the question “Can we have an LLM interpret NLP results?” So far, at least, I think the results are promising: even when the SEO themes are a little junky, both the Mistral and OpenAI LLMs are able to offer some good generic SEO advice.